Presenting a fake ID is a tried-and-true method for fraudsters, money launderers, human traffickers, and other criminals. These bad actors pretend to be someone else at account opening or at another verification point in the customer journey.

Account openings and high-value transactions are increasingly completed digitally, and the risk of fake ID fraud has followed them there. Even when account opening shifted to the web, fraudsters typically still used a physical fake ID or a digital or paper replica.

That is, until recently. Artificial intelligence (AI) has allowed bad actors to shift from low-volume fake IDs to deep fakes created solely for criminal purposes at a fraction of the cost of old-school fake IDs. With the high volume of personally identifiable information (PII) and biometric data available on the dark web or public social media accounts, AI engines can commingle genuine human information with AI-generated information and IDs. This makes separating good from bad even more difficult. And unfortunately, deep fakes are here to stay.

In the identity verification world, the question of how a deep fake ID gets introduced into a decisioning workflow isn’t a new one. Differentiating between a good customer uploading a genuine ID and a bad actor uploading a fake, and now a deep fake, is a core function.

Injection attacks are one fraud tactic bad actors are using to submit fakes. With the AI factors listed above, these attacks are gaining steam, and they can be difficult to stop, but not impossible.

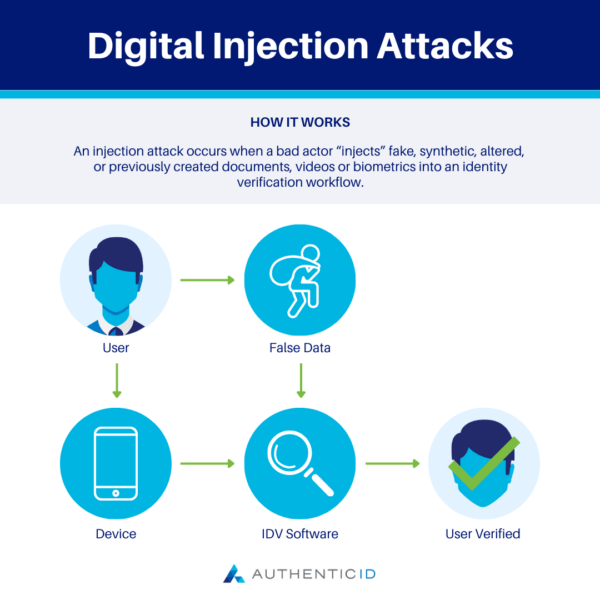

What Are Injection Attacks?

An injection attack occurs when a bad actor “injects” fake, synthetic, altered, or previously created documents, videos, or biometrics into an identity verification workflow. For example, a bad actor lets the capture application on the phone or computer take a photo of an ID, then replaces that captured photo with a fake or synthetic document. By replacing the image inside the capture workflow, they simulate the ordinary act of “uploading” an image and “inject” bad data to bypass traditional fraud detection and identity verification methods.

Think of it this way. If you were able to upload a photo from all your photos on your computer instead of using the camera capture, then you would be able to choose any photo you wanted. Injection is the same. The injector chooses which fake ID they want to use, and then they can “upload” by replacing the captured photo.

With this act, the fraud has started. Even scarier, a bad actor can automate this entire process and submit fakes while watching their bank accounts and eating popcorn.

Injection attacks can occur several ways, including:

- Deepfake video injection: Bad actors can inject deepfake videos into an authentication workflow to mimic a legitimate customer or user and bypass a facial recognition system (this is where liveness detection is especially useful!).

- Voice authentication bypass/injection attack: New, AI-based voice synthesis technology can allow fraudsters to spoof and synthesize someone’s voice and voice patterns, allowing them to more easily bypass voice-activated authentication systems.

- Biometric-based injection attack: From the use of artificial fingerprints to even forging vein patterns, these convincing fakes can bypass many authentication platforms.

Where and How Can Injection Attacks Be Stopped?

Injection attacks can be difficult to stop, and there is no single silver bullet to stop an injection attack. Stopping attacks requires multiple silver bullets that are introduced at a rate faster than the most sophisticated fraudster can dodge.

Criminals have sales goals and quotas to fill. So, as we introduce a bullet, they introduce a way to dodge the bullet. To stop injection attacks, your silver bullets must have a multi-faceted approach:

- Stop them at the source.

- Stop them after the source and before they make any money.

- Discover when your bullet misses and respond.

Stopping Injection Attacks at the Source

Identity Verification (IDV) experts have substantial experience analyzing how fraudsters and injectors behave when capturing photos of identity documents. We can use this information to identify behavioral mistakes and the resulting anomalies to detect fraud. These behaviors follow the principle of what, where, and when.

What

The what is the document the fraudsters submit during a workflow. Here is where you can often see fraudsters make mistakes when they select what images to inject. An example: passports don’t have a back, but bad actors often don’t remember this, and they’ll submit a document that can be marked as fake quickly. Because of a long history with fraudsters’ bad behavior, it’s possible to identify the “symptoms” of a potential mistake and craft intelligent algorithms to identify, risk assess, and stop them.

The risk of the what is false rejections. Sometimes a genuine customer makes a similar mistake and could be identified as a fraudster, meaning that your system must take steps to differentiate between good and bad, and sometimes a good person may have to submit twice. Visual clues are crucial to tracking the what, especially if a bad actor is using a virtual camera.
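The passport example above can be sketched as a simple rule check. This is a minimal illustration, not AuthenticID's algorithm: the `HAS_BACK` table, the `Submission` type, and the flag names are all hypothetical, and real issuing-authority rules are far more detailed. Because genuine customers make the same mistakes, the sketch only returns flags for risk assessment rather than rejecting outright.

```python
from dataclasses import dataclass

# Hypothetical rule table: does this document type have a back side?
# Illustrative only; real document rules vary by issuer and version.
HAS_BACK = {"passport": False, "drivers_license": True, "id_card": True}


@dataclass
class Submission:
    doc_type: str
    has_front_image: bool
    has_back_image: bool


def side_anomalies(sub: Submission) -> list[str]:
    """Return anomaly labels; an empty list means nothing was flagged."""
    expects_back = HAS_BACK.get(sub.doc_type)
    if expects_back is None:
        return ["unknown_document_type"]
    flags = []
    if not sub.has_front_image:
        flags.append("missing_front")
    if sub.has_back_image and not expects_back:
        # e.g. a "back" image submitted for a passport
        flags.append("unexpected_back")
    if expects_back and not sub.has_back_image:
        flags.append("missing_back")
    return flags


print(side_anomalies(Submission("passport", True, True)))
```

A flag like `unexpected_back` would typically feed a risk score or trigger a resubmission request, which is how a good customer who made an honest mistake gets a second chance.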

Where

Detecting the where of these attacks (the location) isn’t easy. Fraudsters know that by declining to share location settings they prevent location-based algorithms from working. While this is good for them, there’s a fraud-stopping catch: fraud fighters like us have developed techniques that find the anomalies in where images are being submitted. In a world of easy vacations and business travel, this is no small feat.
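The article does not say which location techniques are used. One widely known check that fits the description is an "impossible travel" test: compare two submissions tied to the same identity and flag them if the implied speed is beyond what a flight could cover. The sketch below assumes some coarse location (for example, one derived from network data) is available for each submission; the thresholds and function names are assumptions for illustration.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 900.0  # assumed: roughly airliner cruising speed
MIN_DISTANCE_KM = 50.0     # assumed: ignore noise in coarse locations


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometers."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def implausible_travel(prev, curr, max_kmh=MAX_PLAUSIBLE_KMH, min_km=MIN_DISTANCE_KM):
    """prev and curr are (lat, lon, datetime) tuples for the same identity."""
    dist = haversine_km(prev[0], prev[1], curr[0], curr[1])
    if dist < min_km:
        return False
    hours = abs((curr[2] - prev[2]).total_seconds()) / 3600
    if hours == 0:
        return True  # far apart at the same instant
    return dist / hours > max_kmh


t0 = datetime(2024, 3, 1, 12, 0)
new_york = (40.7128, -74.0060, t0)
london_1h = (51.5074, -0.1278, t0 + timedelta(hours=1))
london_10h = (51.5074, -0.1278, t0 + timedelta(hours=10))
print(implausible_travel(new_york, london_1h), implausible_travel(new_york, london_10h))
```

Setting the speed threshold near airliner speed is what keeps ordinary vacation and business travel from being flagged.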

When

The when of an attack is important. Fraudsters have often committed fraud at off hours, like 2am. But fraudsters know we’re on to them, so the smart ones commit fraud during peak hours and randomize their attacks. Their behaviors and trends don’t go unnoticed either. Bad actors trying to trick an analysis system can be stopped as well, if you know when to look.
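Even when attackers randomize the hour, automated submissions tend to cluster in time. One common way to surface that, offered here as an assumption rather than the method this article has in mind, is a sliding-window velocity check keyed on something stable such as a device or network identifier. The `VelocityMonitor` class, its window, and its limit are hypothetical.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class VelocityMonitor:
    """Flags a key that submits more than max_events within a time window.

    Assumes events for each key are recorded in chronological order.
    """

    def __init__(self, window=timedelta(minutes=10), max_events=3):
        self.window = window
        self.max_events = max_events
        self._events = defaultdict(deque)

    def record(self, key: str, when: datetime) -> bool:
        """Record one submission; return True if the key is over the limit."""
        q = self._events[key]
        q.append(when)
        # Drop timestamps that have fallen out of the window.
        while when - q[0] > self.window:
            q.popleft()
        return len(q) > self.max_events


monitor = VelocityMonitor()
start = datetime(2024, 3, 1, 14, 0)  # peak hours, not 2am
results = [monitor.record("device-A", start + timedelta(minutes=m)) for m in range(4)]
print(results)
```

The check is indifferent to time of day, so moving an attack into peak hours does not help the attacker avoid it.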

Stopping injections at the source involves multiple silver bullets all aimed in the direction of the injectors. Injectors and fraudsters adopt certain behaviors and make certain mistakes that a cutting-edge identity verification solution can detect and defend against. While no solution will stop 100 percent of attacks (if anyone tells you this, you should walk away from that vendor immediately!), a multi-faceted approach can defend against most fakes and deep fake attacks, and the techniques that are yet to come.

What, Where, When is the first step in the approach to stopping injection attacks. Next, let’s explore the “Stop them after the source and before they make any money” phase.

Stopping Injection Attacks After They Occur – But Before Any Money is Lost

In this stage of stopping fraudsters and injectors, the source-based identifiers didn’t stop the injection attack; some fraudsters will always dodge the bullets. For solution providers that offer only injection detection, this is where the rubber will meet your “loss road.” If they let an injection through but have nothing to stop the deep fake it carried, then you will have allowed that fraudster to meet their sales quota.

Probabilistic Visual Algorithms

In some cases, with fraud attacks and especially injection attacks, a face or a document is too perfect to be real. That’s where probabilistic visual algorithms come into play. We’re no strangers to designing algorithms that detect fake documents; in fact, the team at AuthenticID has designed such algorithms to detect fake documents without relying on traditional features such as holograms or UV. This is important because the shift to mobile devices for identity verification rendered traditional security tests nearly useless. It was crucial to develop visual techniques that stopped fakes without having to rely on those traditional features. This is an ongoing process, with constant expansion and refinement of these techniques to stop over 98% of fake IDs.

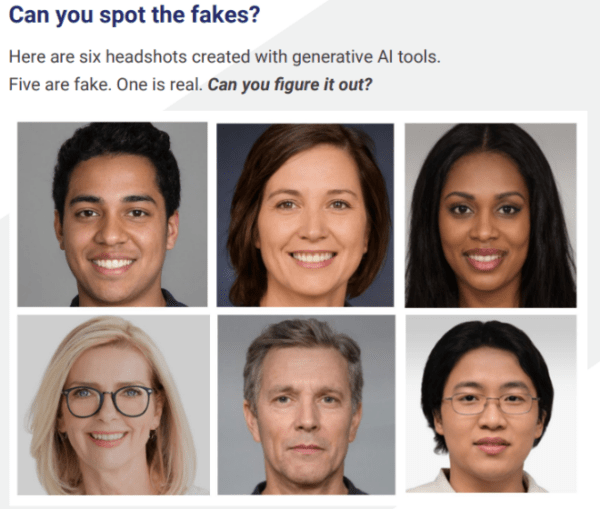

Deep fakes have all but eliminated the visual errors that fraudsters used to make when creating a typical fake document. Bad actors have used machine learning and AI to create fake documents of incredible quality; they could fool 95 of 100 fraud professionals. That’s why AI is required to fight these AI-generated documents. Only by leveraging AI, ML, and substantial professional expertise can we create solutions that find the mistakes made by deep fake-enabled fraudsters. Visual errors can become a goldmine of algorithms.

Deterministic Algorithms

Deep fakes are not just visually excellent. These bad actors have found ways to create PII that is astoundingly accurate.

But not all accuracy is created equal. Understanding how government authorities create the information on documents is critical. At AuthenticID, we’ve used deterministic PII techniques for a long time to identify fake documents. If we see it once, it can be stopped twice. What has been a pleasant surprise is that AI can make the same mistakes as the traditional fraudster. Even simple factors like eye color or expiration date can be erroneous and detected by a strong platform.
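A deterministic check of the kind described here can be sketched as a set of hard rules over the extracted fields. The rule values below (`MAX_VALIDITY_YEARS`, the eye color codes, the flag names) are hypothetical placeholders; actual rules depend on how each issuing authority produces its documents and are not given in this article.

```python
from datetime import date

# Hypothetical rule values for illustration only.
MAX_VALIDITY_YEARS = 10
ALLOWED_EYE_COLORS = {"BLK", "BLU", "BRO", "GRY", "GRN", "HAZ", "MAR", "PNK", "DIC", "UNK"}


def pii_inconsistencies(birth: date, issued: date, expires: date, eye_color: str) -> list[str]:
    """Return rule violations found in a document's extracted fields."""
    flags = []
    if issued <= birth:
        flags.append("issued_before_birth")
    if expires <= issued:
        flags.append("expires_before_issue")
    elif expires.year - issued.year > MAX_VALIDITY_YEARS:
        flags.append("validity_too_long")
    if eye_color.upper() not in ALLOWED_EYE_COLORS:
        flags.append("unknown_eye_color")
    return flags


print(pii_inconsistencies(date(1990, 1, 1), date(2020, 5, 1), date(2045, 5, 1), "PURPLE"))
```

Unlike a probabilistic visual score, each of these rules either holds or it does not, which is why a violation seen once can be turned into a rule that catches the same mistake again.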

What Happens When A Bad Actor Can Dodge All Our Fraud Fighting Efforts?

Once again, if anyone tells you they have a 100% effective solution to stop fraud: back away safely and quickly.

No human or solution can detect all fraud. Solutions can only reduce the number of misses to an absolute minimum. So, when a fraud gets through your initial checks, you must ask yourself: does my solution provider rapidly resolve these misses? Sure, adding a missed document number to a watchlist is a quick and easy fix. Any solution should be able to handle that. But for those smart AI fraudsters who change PII, how do you stop them?

These bad actors can be stopped by a provider using multiple biographic and biometric watchlists that make it extremely difficult for fraudsters to hide what they look like, or who they are pretending to be. For one client, we stopped 50,000 repeat offenders in 12 months, and not just by using a single document number watchlist.
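The idea of matching on more than a document number can be sketched as a watchlist keyed on several attributes at once, so that changing one field does not hide a repeat offender. This is a simplified illustration under stated assumptions: the field names are hypothetical, and `face_id` stands in for an identifier assigned by an upstream face matcher, since real biometric matching is similarity-based rather than an exact lookup.

```python
import hashlib

# Hypothetical attribute names; "face_id" is assumed to come from
# an upstream biometric matcher.
FIELDS = ("document_number", "name_dob", "face_id")


def _keys_for(record: dict) -> dict:
    """Map each present field to a normalized, hashed lookup key."""
    keys = {}
    for field in FIELDS:
        value = record.get(field)
        if value:
            normalized = " ".join(str(value).lower().split())
            digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
            keys[field] = field + ":" + digest
    return keys


class Watchlist:
    def __init__(self):
        self._keys = set()

    def add(self, record: dict) -> None:
        self._keys.update(_keys_for(record).values())

    def matches(self, record: dict) -> list[str]:
        """Return the names of the fields that hit the watchlist."""
        return sorted(f for f, k in _keys_for(record).items() if k in self._keys)


wl = Watchlist()
wl.add({"document_number": "X123", "name_dob": "Jane Doe|1990-01-01", "face_id": "face-77"})

# Same face, new document number and new PII.
retry = {"document_number": "Y999", "name_dob": "John Roe|1985-02-02", "face_id": "face-77"}
print(wl.matches(retry))
```

Hashing the normalized values is a design choice in this sketch to avoid keeping raw PII in the watchlist itself.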

How? It’s about the ability to identify exceptions, and then design and deploy new algorithms rapidly. A fraud system should be designed to stop group fraud attacks in an hour while a permanent solution is designed. It also takes vision to identify what the next change fraudsters will make. Internal and external expertise will continue to discover new ways to use AI to fight AI.

There are many new, emerging types of fraud attacks: injection attacks, deep fakes, AI-enabled fraud. But those of us who have been in the identity verification game for a long time recognize that these trends are just the adaptation of the fake ID market that has existed for decades. Where once we stopped fakes with humans, then scanners, we were forced to adapt to iPhones and the mass adoption of mobile phones in everyday life when cell phones became the capture mechanism of choice. Then bad actors could buy terrific fakes online and download them in a minute. Now AI has become involved and is creating those amazing deep fakes, which can be injected around the phone capture workflows. It’s a new era of the same trajectory.

2024 is just a new phase in the evolution of good versus bad. Experience in adapting to change is what makes defenses against injection attacks and deep fakes so effective. Continuous monitoring is key to identifying attacks that haven’t been stopped and responding accordingly.

Deep fakes are being stopped, and we’re adapting our techniques to stop many injection attacks. And even today, we’re still developing new methods to stop the next wave of changes to deep fakes and injections. It’s an evolution: adapt or become redundant.

Learn More About the Algorithms That Can Stop Deep Fake Injection Attack Fraud

As the threat of deep fakes and deep fake injection attacks surges, AuthenticID continues to innovate to stop fraudsters. Contact us to learn more about how your organization can meet the evolving challenge of fraud.